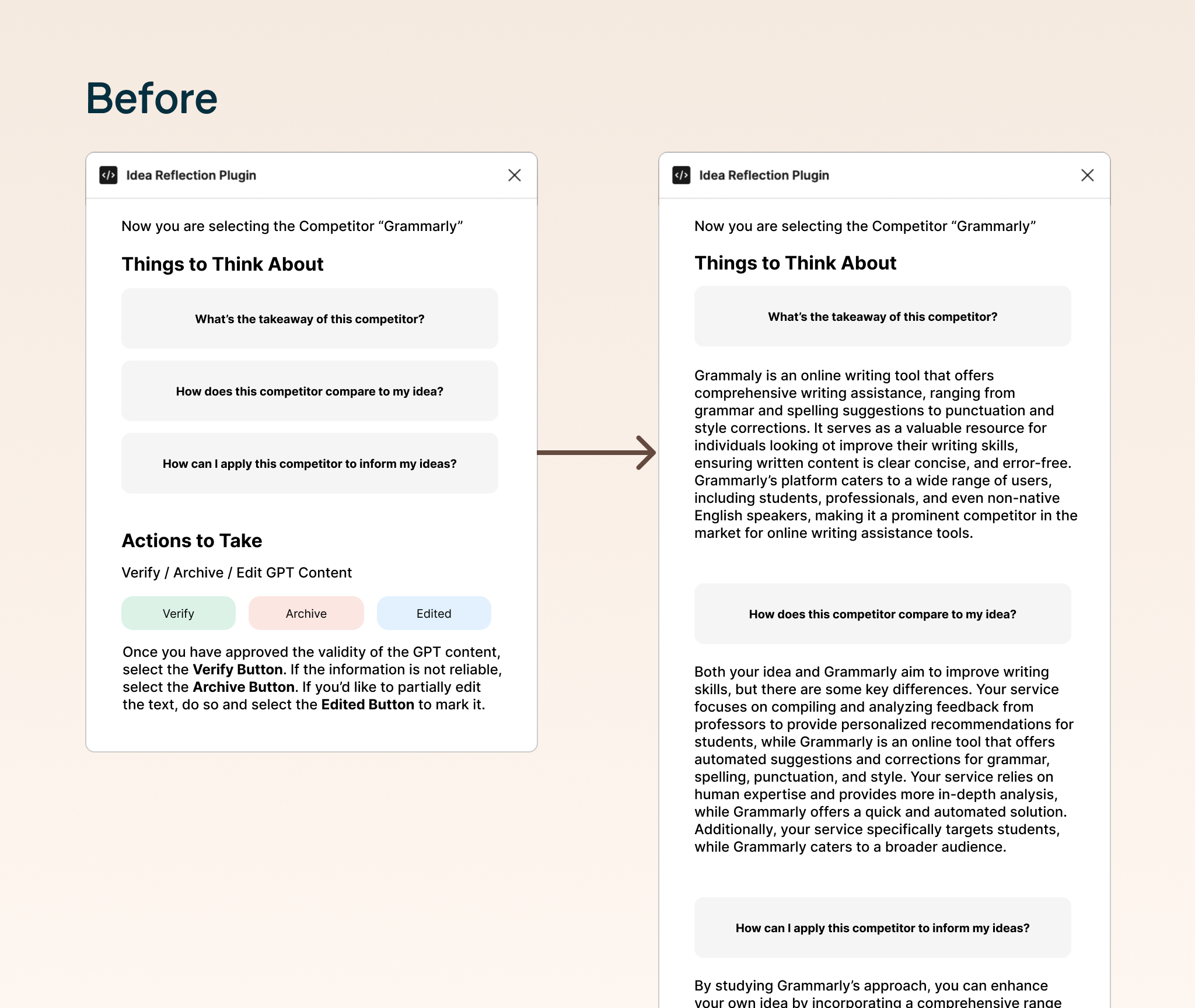

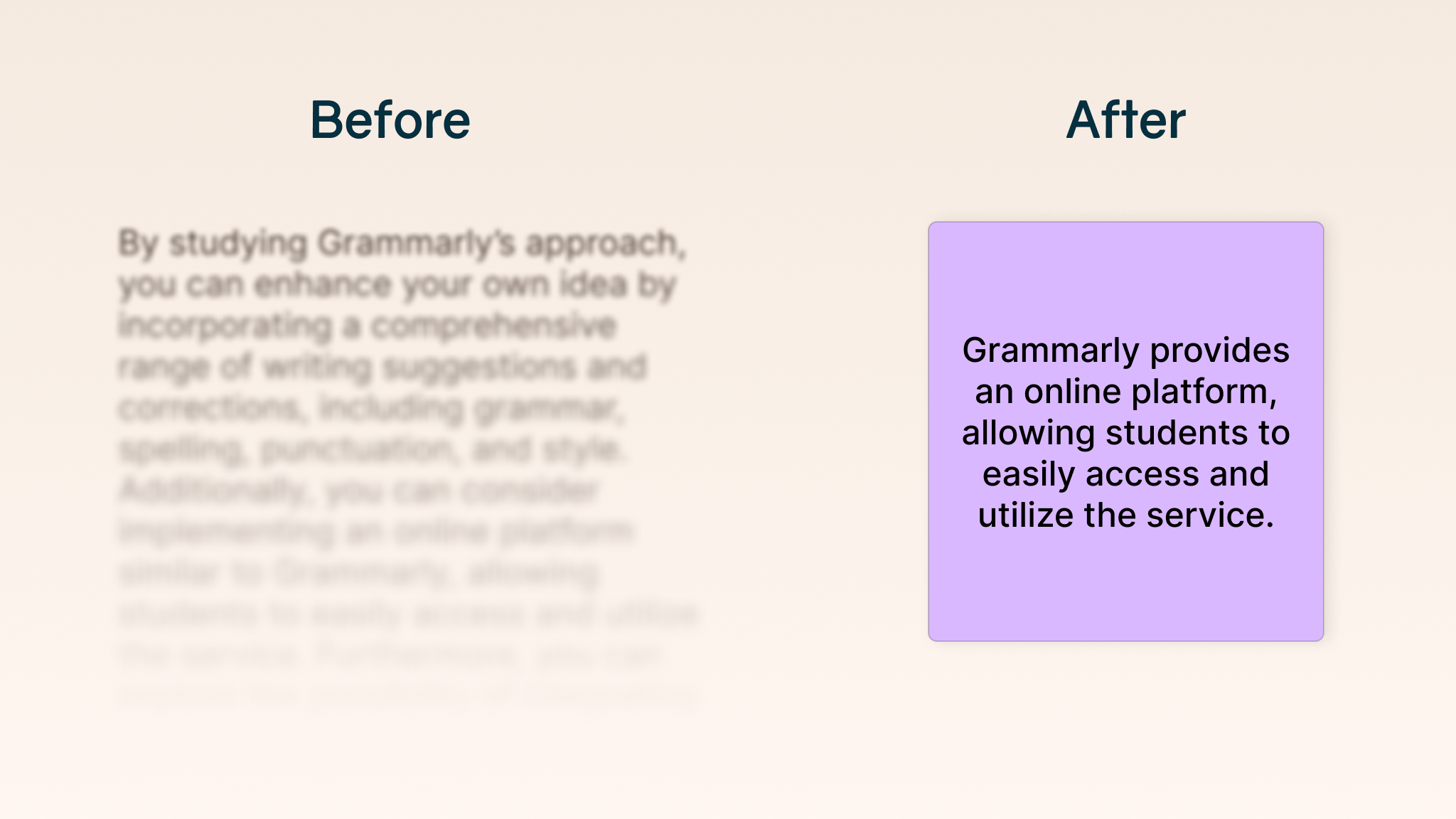

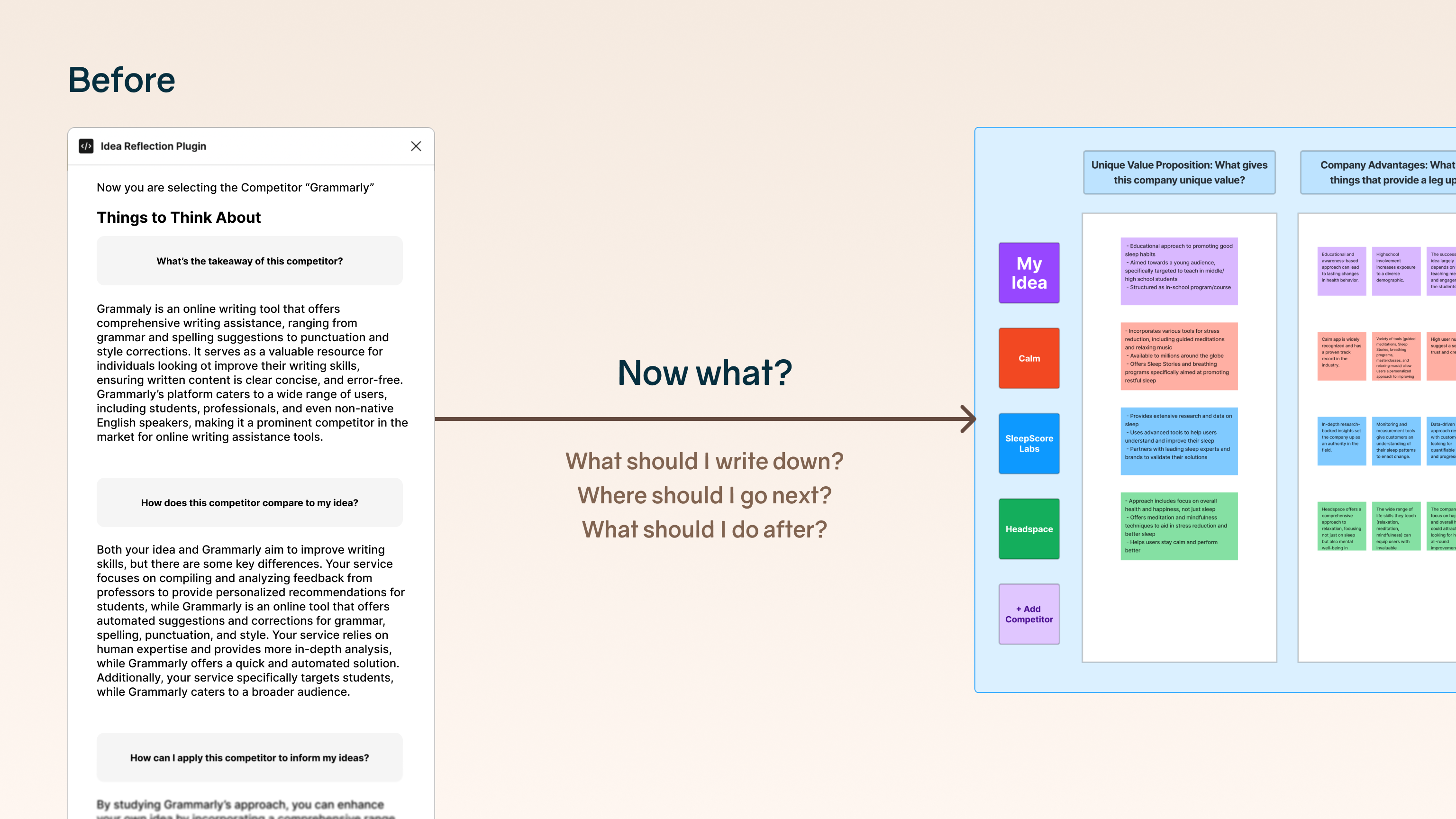

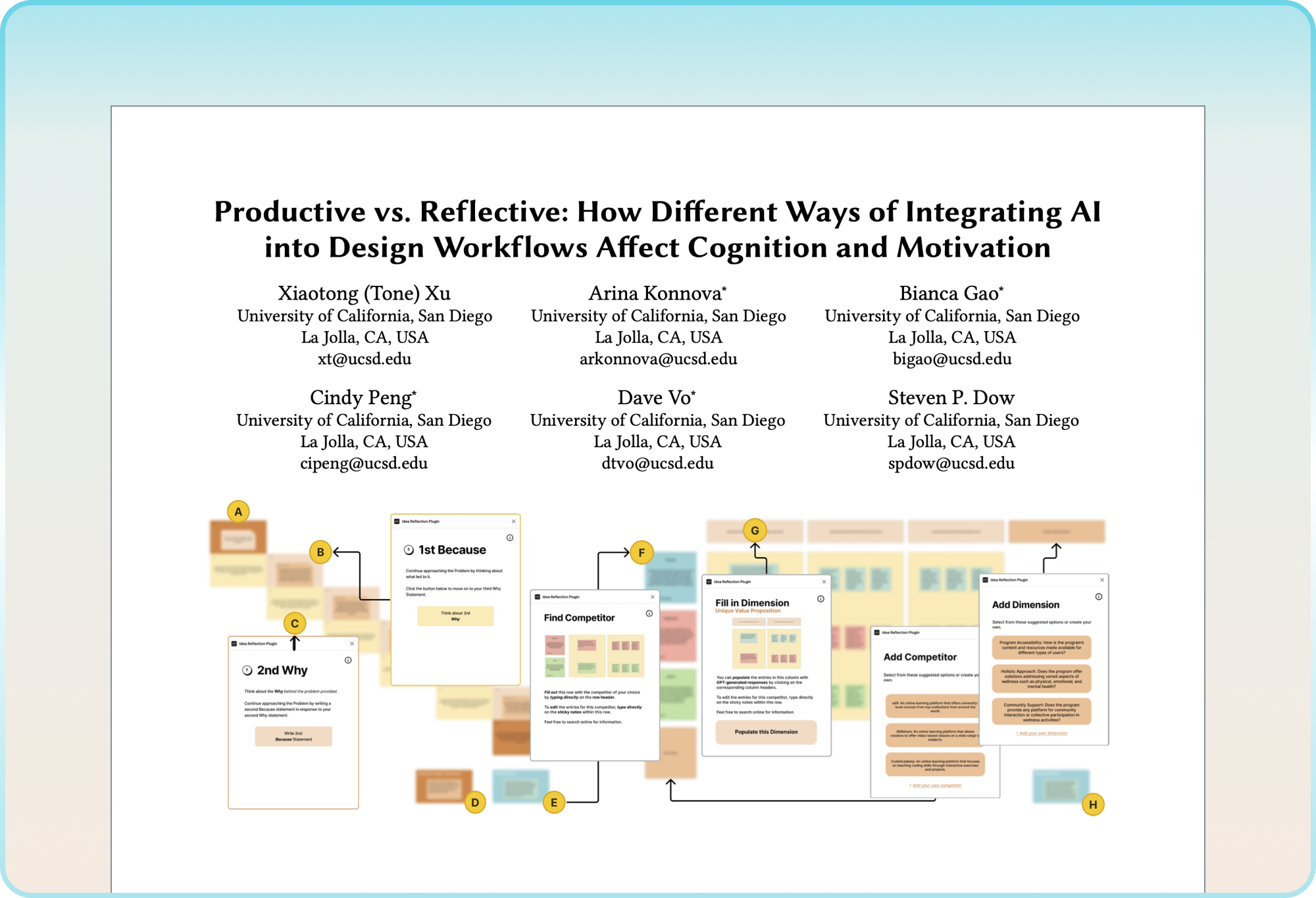

Design smarter: An adaptive plugin that guides, challenges, and strengthens your creative problem solving

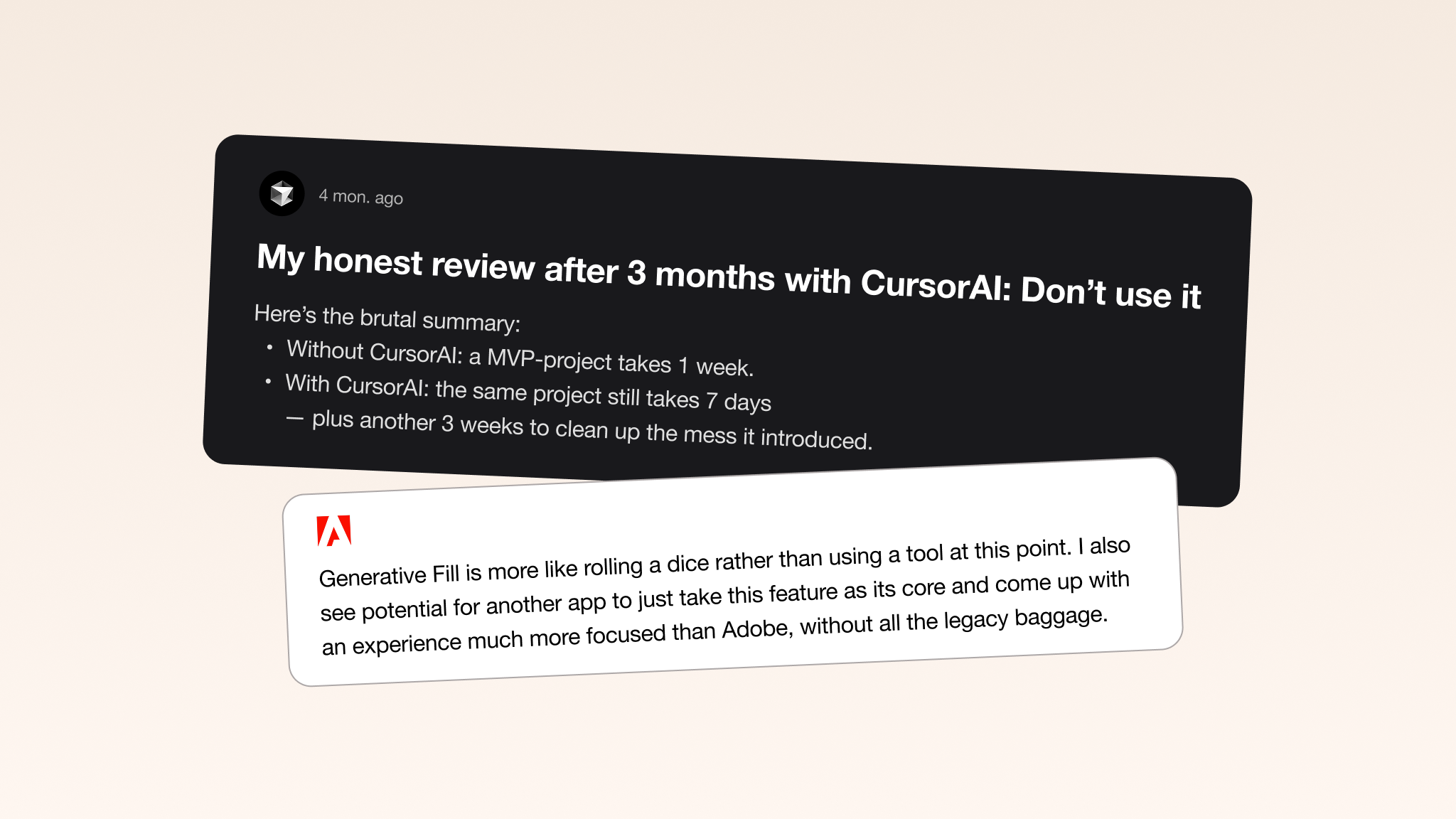

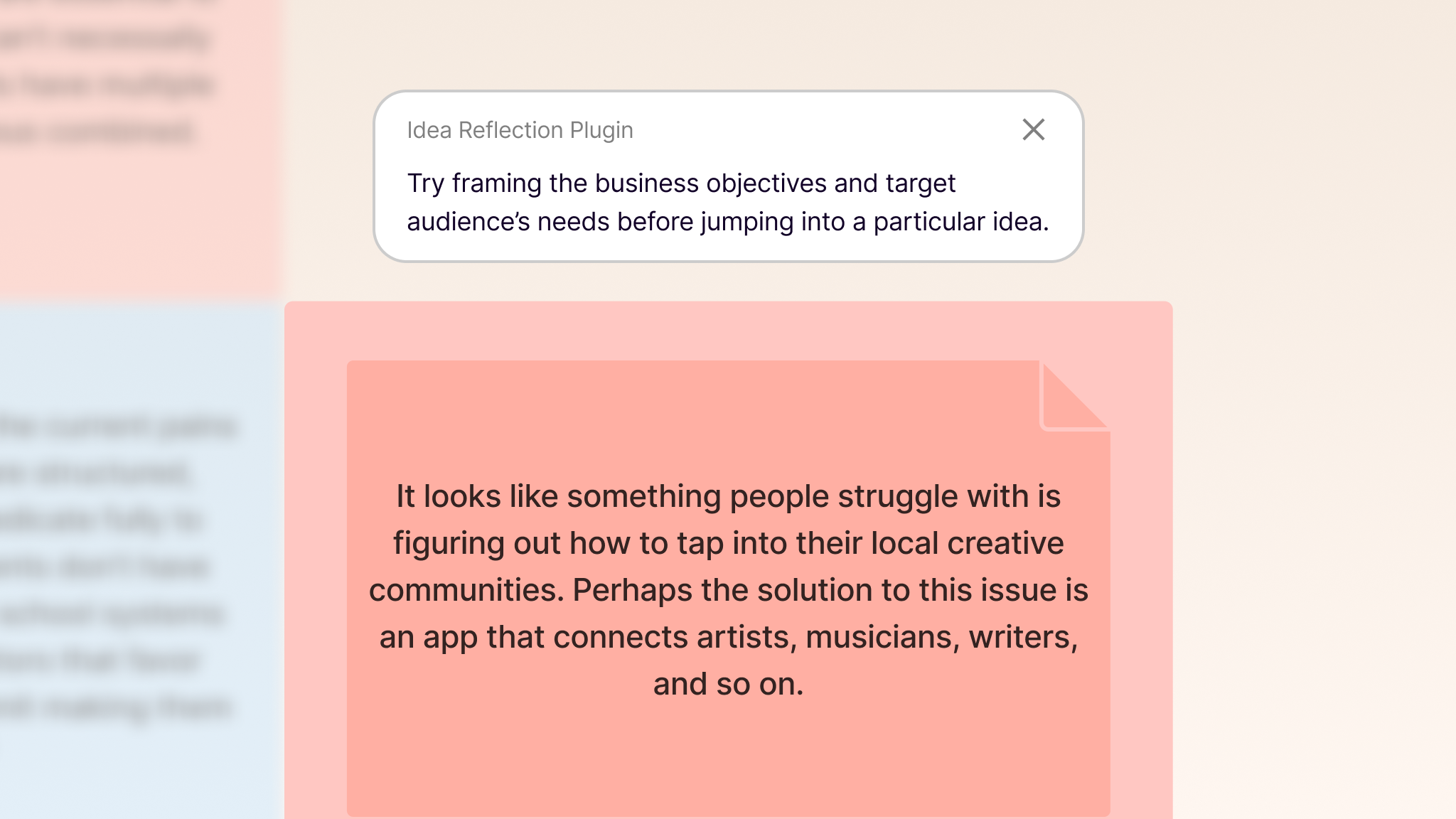

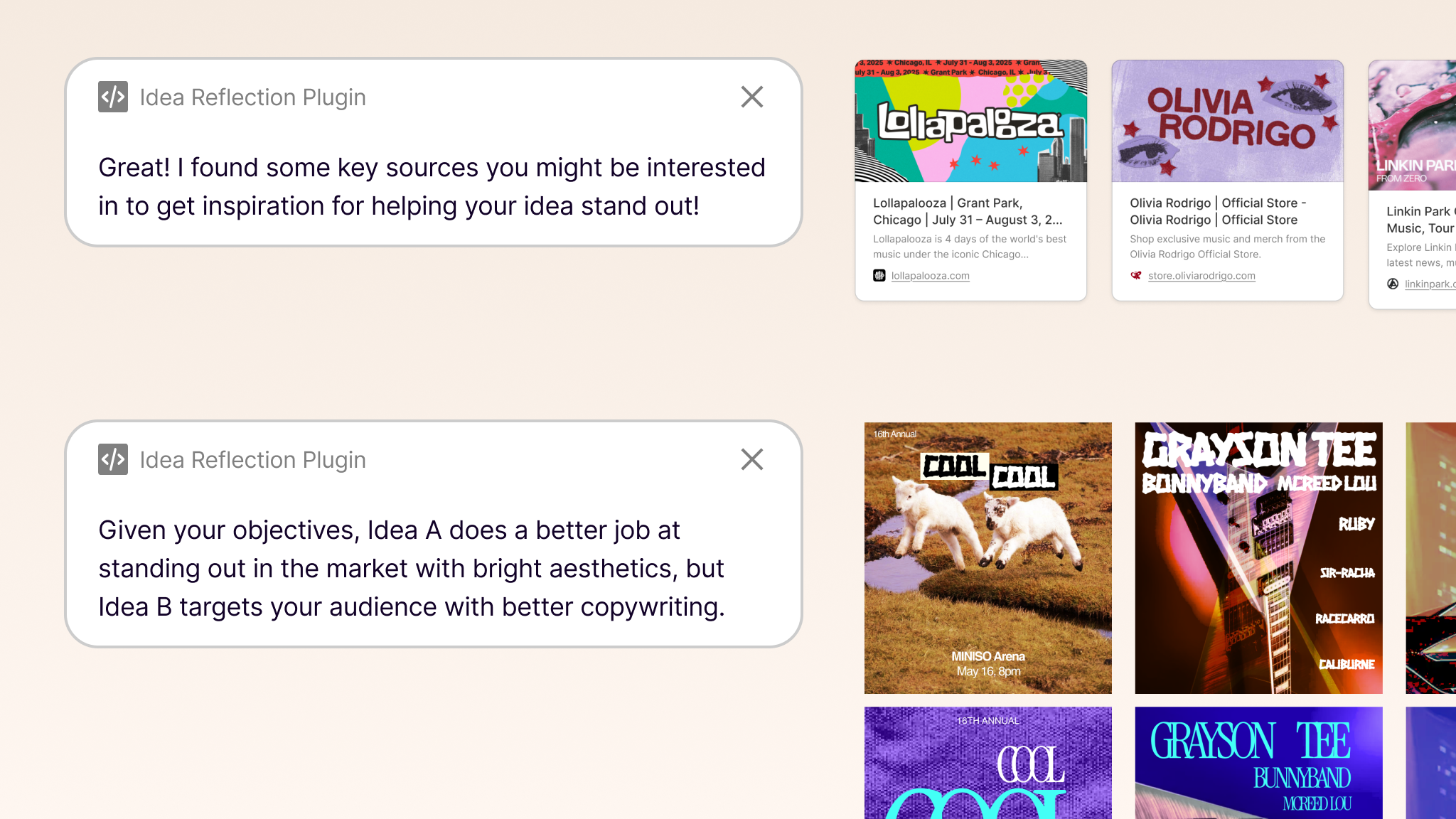

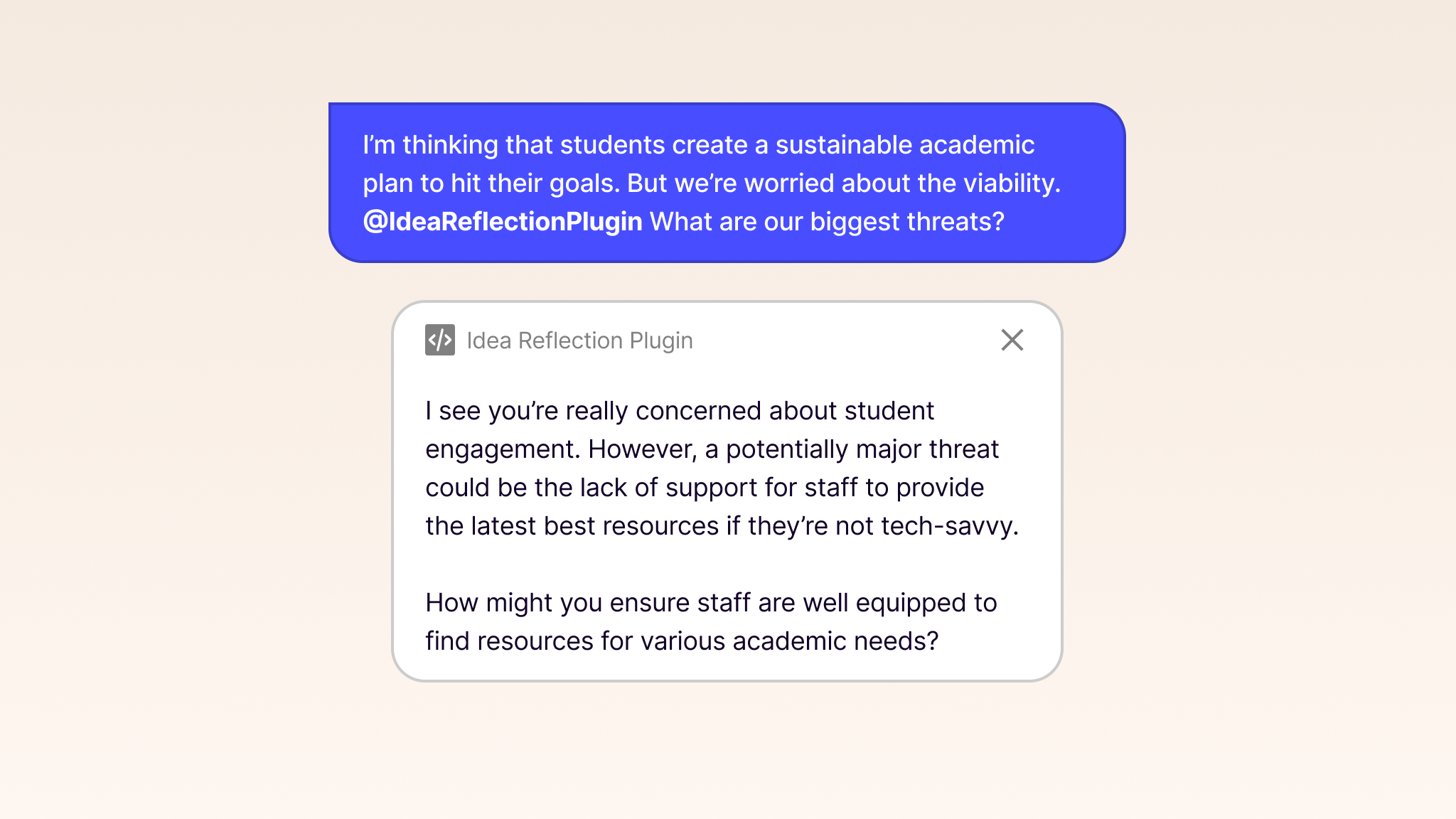

POV: Knee-deep in brainstorming novel solutions? Imagine our AI-powered plugin leaving your idea with a stronger value prop, more resilience against edge cases, and more!

My work led to:

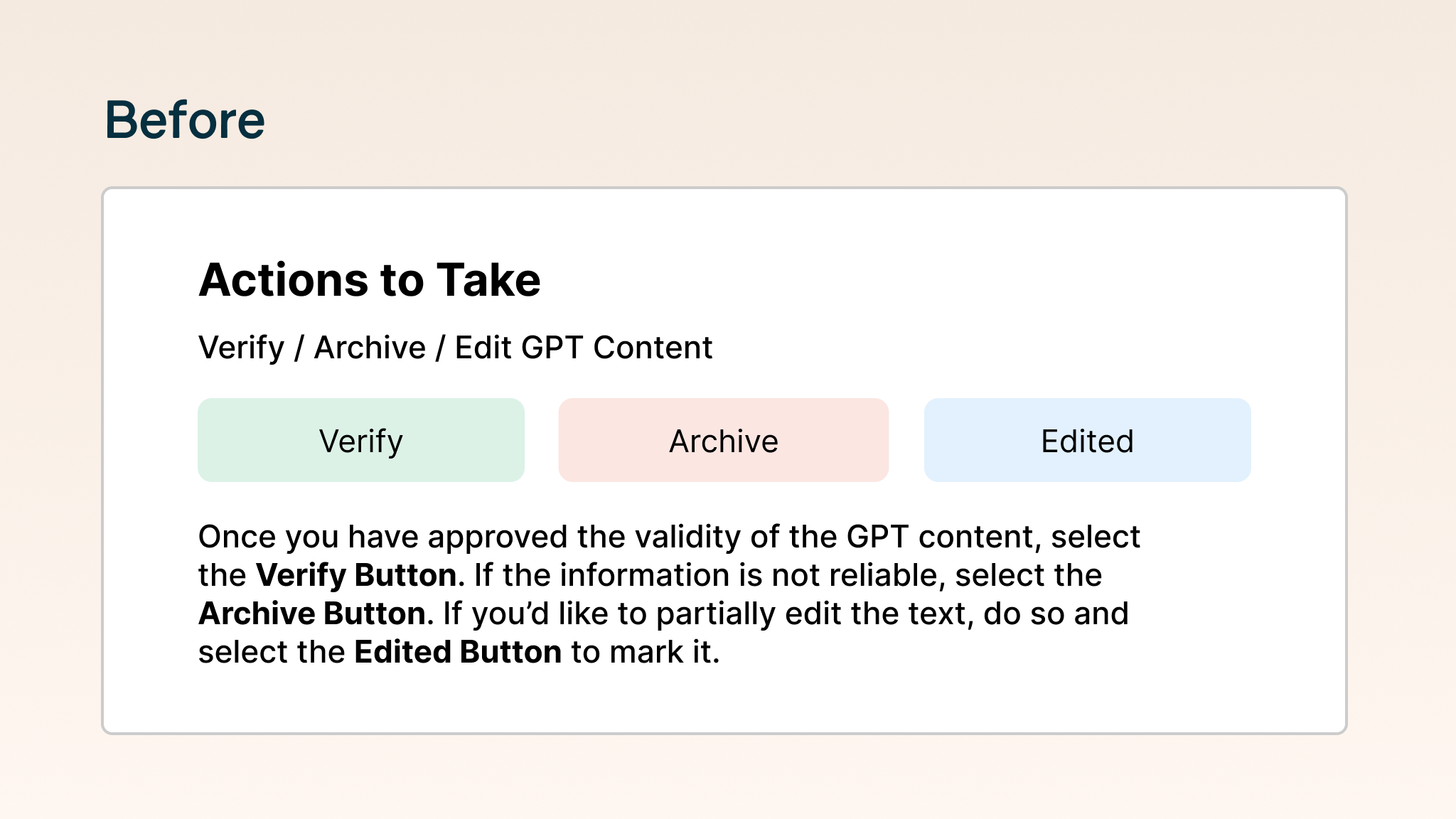

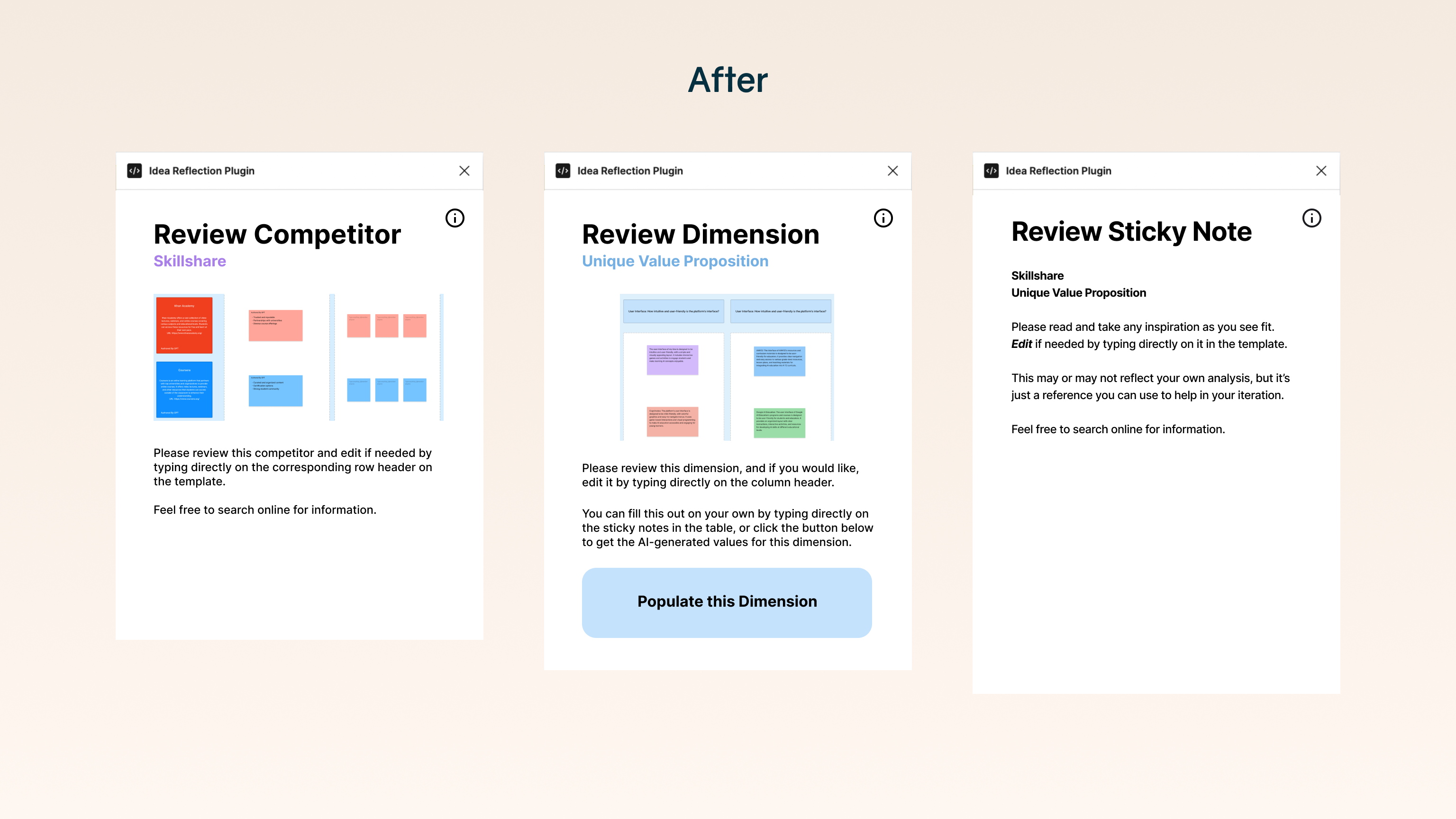

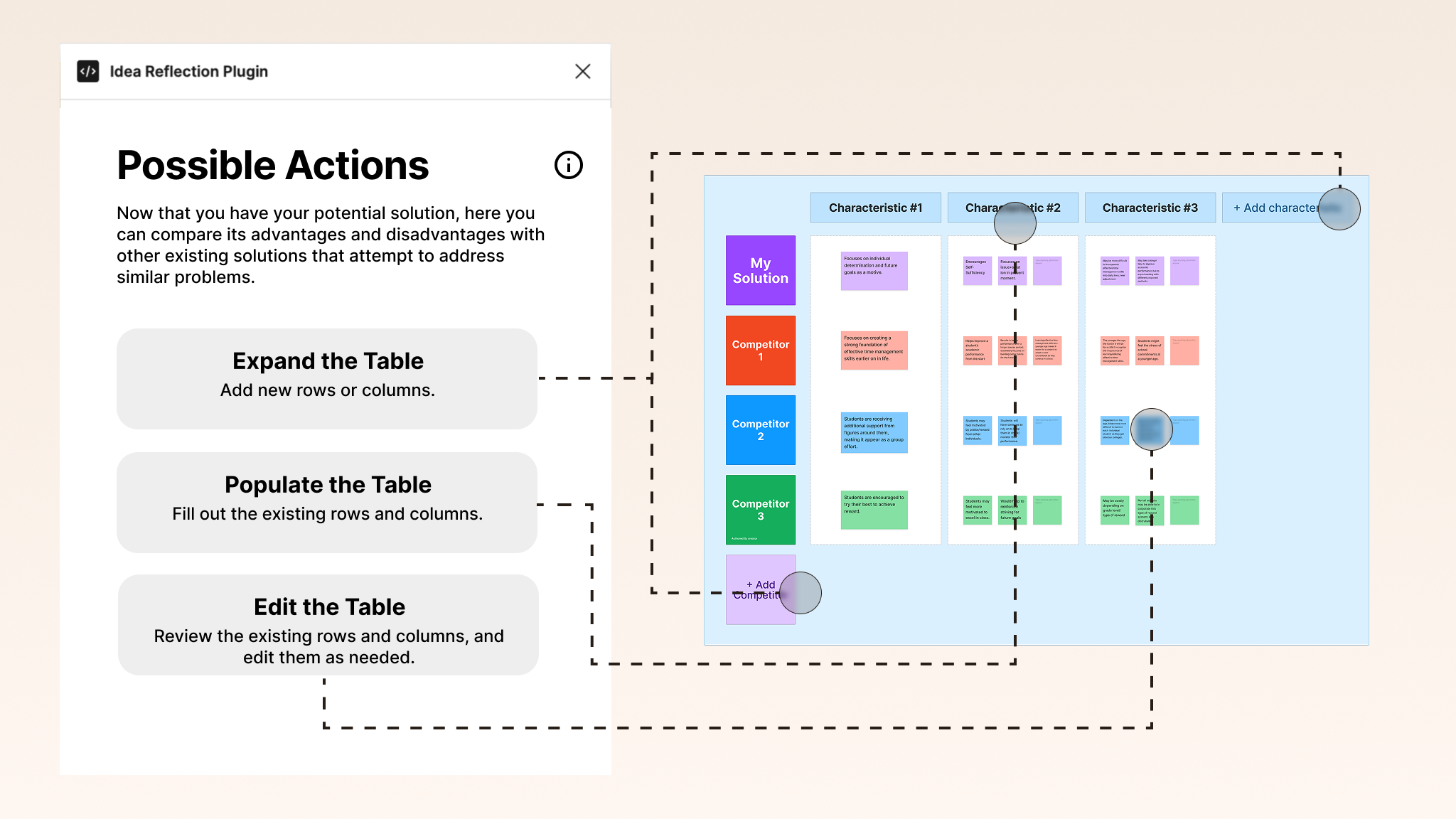

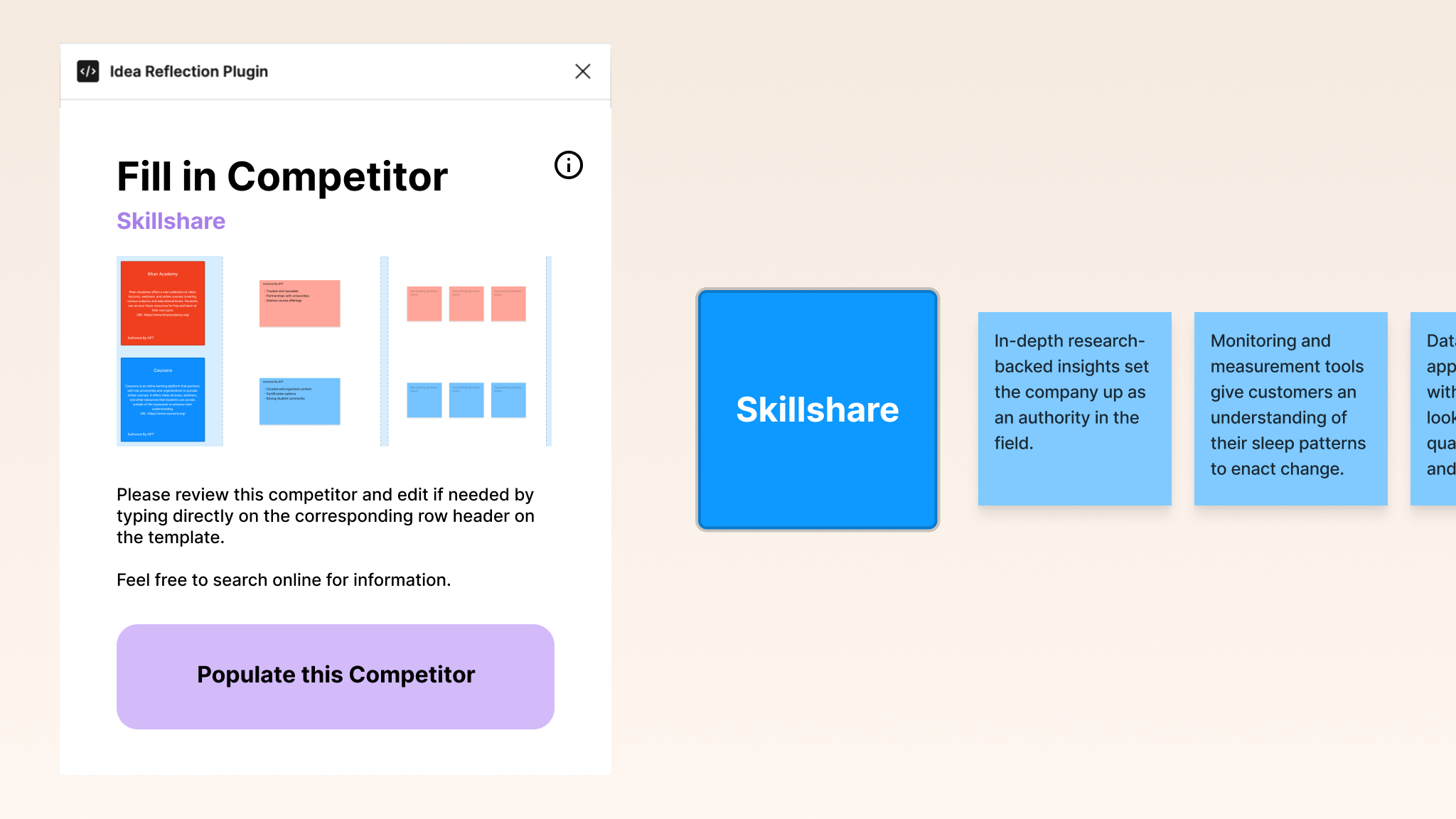

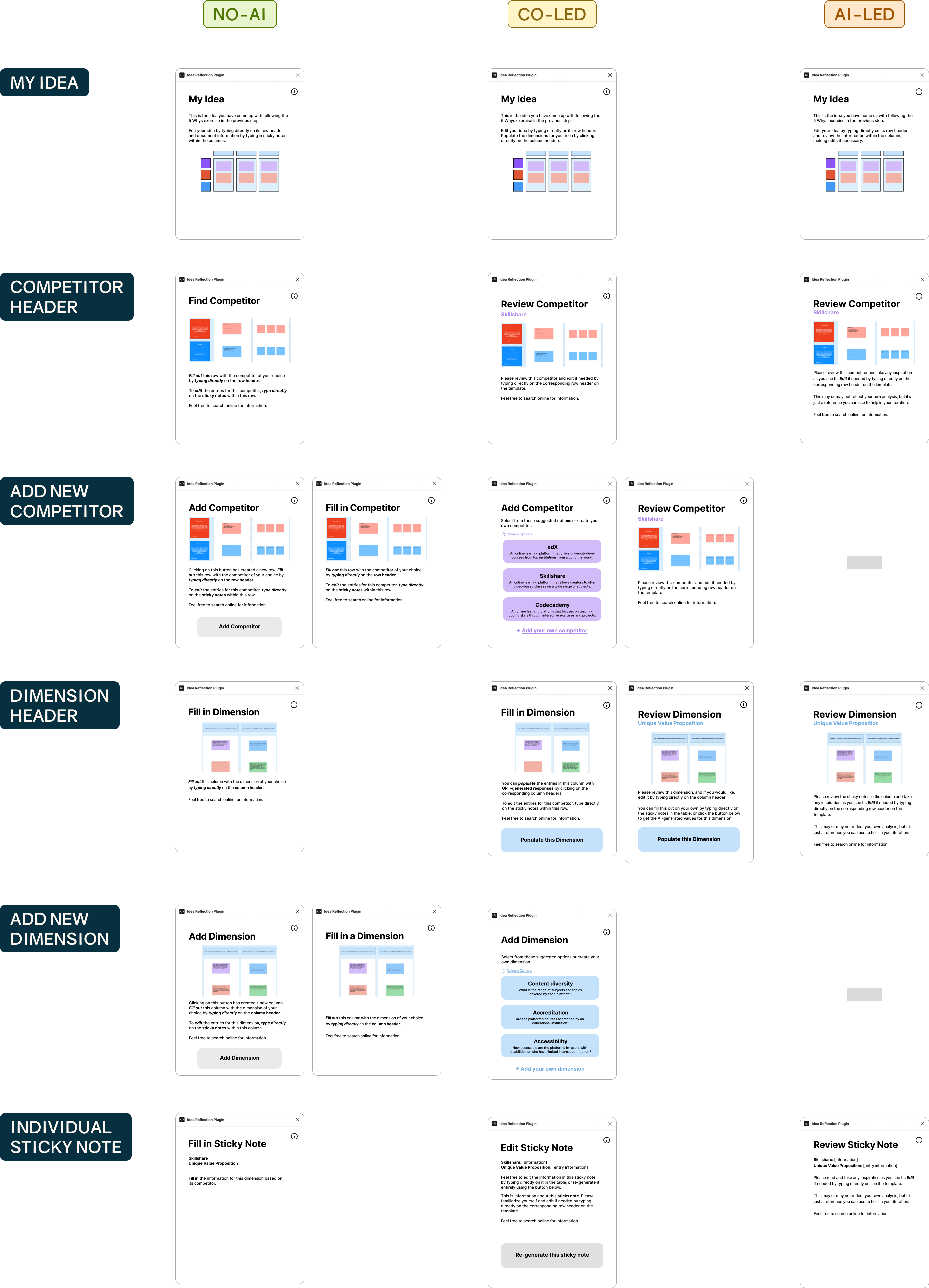

Shipped a redesigned research plugin for a CHI’25 research study.

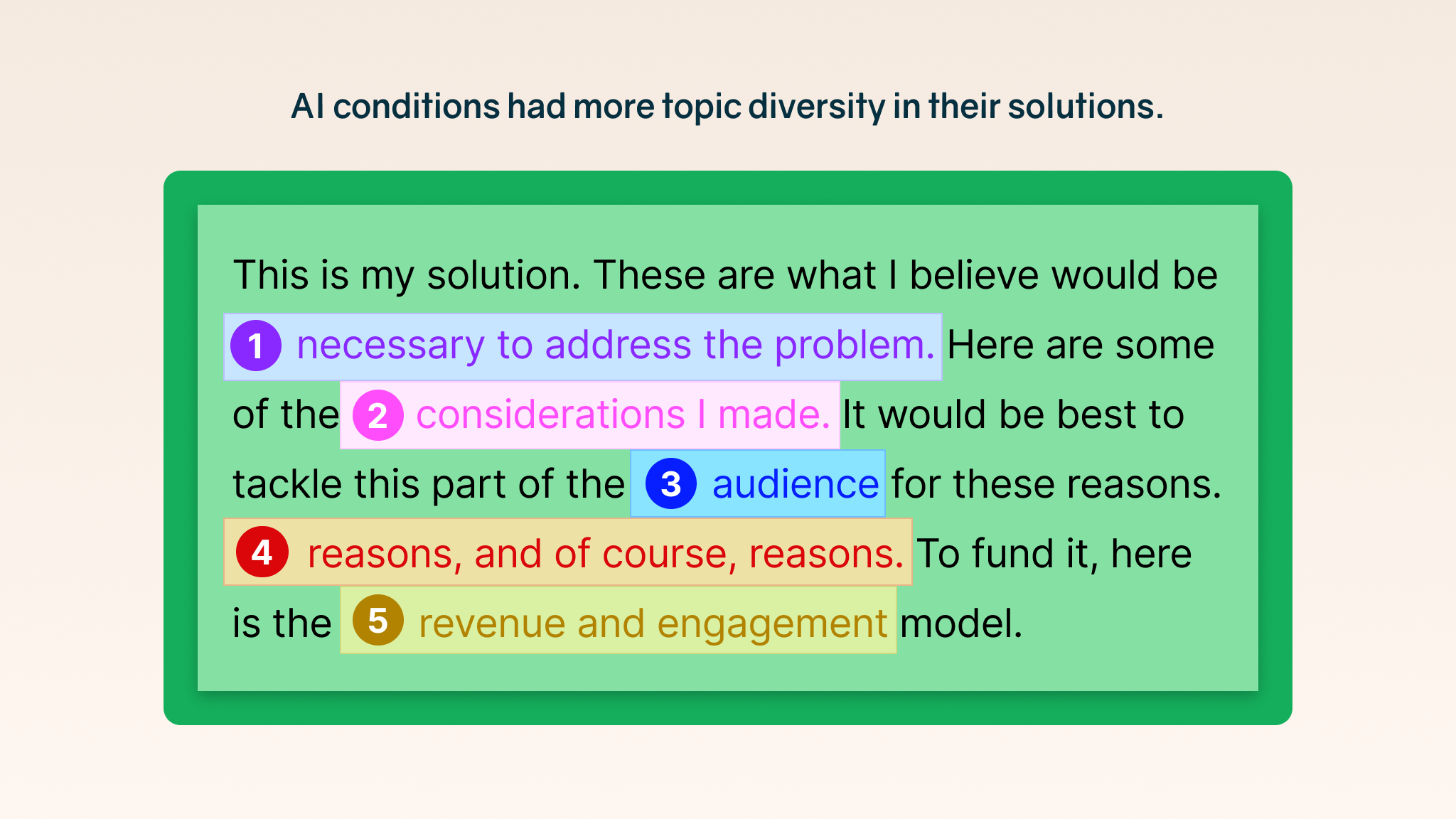

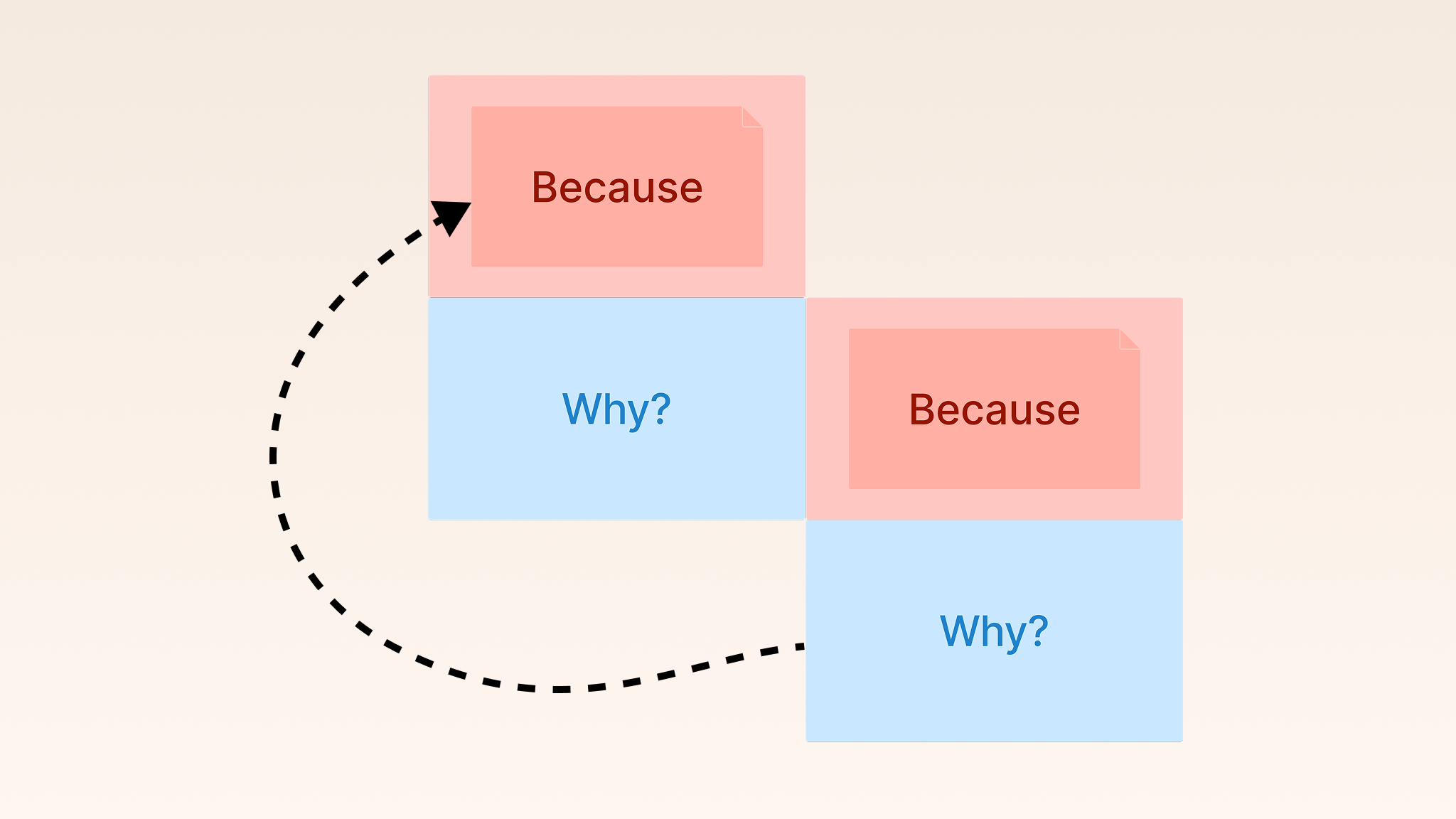

Drove 61% more topic diversity + 20% faster iteration during user sprints.